|

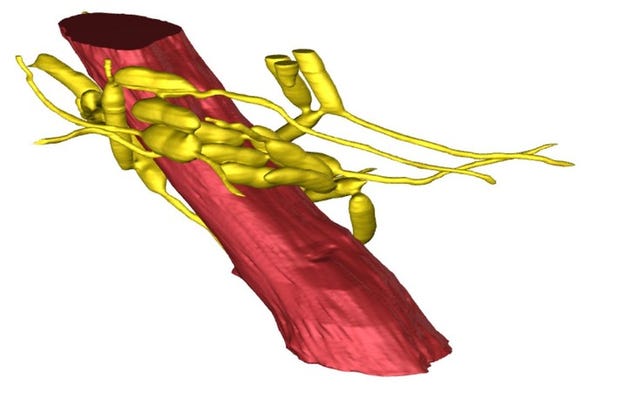

| Credit: Harvard University |

Researchers from the Harvard John A. Paulson School of Engineering and Applied Sciences (SEAS) have developed a new flow battery that stores energy in organic and organometallic molecules dissolved in neutral pH water. This new chemistry allows for a non-toxic, non-corrosive battery with an exceptionally long lifetime, and it offers the potential to significantly decrease production costs.

A Neutral pH Aqueous Organic–Organometallic Redox Flow Battery with Extremely High Capacity Retention

Eugene S. Beh†‡

† John A. Paulson School of Engineering and Applied Sciences, Harvard University, Cambridge, Massachusetts 02138, United States

‡ Department of Chemistry and Chemical Biology, Harvard University, Cambridge, Massachusetts 02138, United States

\# Department of Chemical Science and Technologies, University of Rome “Tor Vergata”, 00133 Rome, Italy

∥ Harvard College, Cambridge, Massachusetts 02138, United States

ACS Energy Lett., 2017, 2 (3), pp 639–644

DOI: 10.1021/acsenergylett.7b00019

Publication Date (Web): February 7, 2017

Copyright © 2017 American Chemical Society

*E-mail: gordon@chemistry.harvard.edu; *E-mail: maziz@harvard.edu.

Abstract

We demonstrate an aqueous organic and organometallic redox flow battery utilizing reactants composed of only earth-abundant elements and operating at neutral pH. The positive electrolyte contains bis((3-trimethylammonio)propyl)ferrocene dichloride, and the negative electrolyte contains bis(3-trimethylammonio)propyl viologen tetrachloride; these are separated by an anion-conducting membrane passing chloride ions. Bis(trimethylammoniopropyl) functionalization leads to ∼2 M solubility for both reactants, suppresses higher-order chemical decomposition pathways, and reduces reactant crossover rates through the membrane. Unprecedented cycling stability was achieved with capacity retention of 99.9943%/cycle and 99.90%/day at a 1.3 M reactant concentration, increasing to 99.9989%/cycle and 99.967%/day at 0.75–1.00 M; these represent the highest capacity retention rates reported to date versus time and versus cycle number. We discuss opportunities for future performance improvement, including chemical modification of a ferrocene center and reducing the membrane resistance without unacceptable increases in reactant crossover. This approach may provide the decadal lifetimes that enable organic–organometallic redox flow batteries to be cost-effective for grid-scale electricity storage, thereby enabling massive penetration of intermittent renewable electricity.
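The abstract's emphasis on ∼2 M solubility matters because the charge an electrolyte can hold scales linearly with reactant concentration, through Faraday's law. The Python sketch below estimates theoretical capacity and energy density from concentration. It is illustrative only: the number of electrons transferred per molecule (default 1) and any cell voltage are assumptions supplied by the caller, not values reported in this article, and the calculation ignores incomplete utilization and other real-world losses.

```python
# Minimal sketch: theoretical charge and energy density of a redox flow
# battery electrolyte from its reactant concentration (Faraday's law).
# Assumptions: every molecule is fully cycled; `electrons` per molecule
# (default 1) and `cell_voltage_v` are caller-supplied, illustrative values,
# not figures reported in the article.

FARADAY_C_PER_MOL = 96485.332  # Faraday constant, coulombs per mole of electrons
SECONDS_PER_HOUR = 3600.0


def theoretical_capacity_ah_per_l(conc_mol_per_l: float, electrons: int = 1) -> float:
    """Charge stored per litre of ONE electrolyte, in ampere-hours per litre."""
    return electrons * FARADAY_C_PER_MOL * conc_mol_per_l / SECONDS_PER_HOUR


def theoretical_energy_wh_per_l(conc_mol_per_l: float, cell_voltage_v: float,
                                electrons: int = 1) -> float:
    """Energy per litre of TOTAL electrolyte, in watt-hours per litre.

    Assumes equal volumes and equal capacities in the positive and negative
    tanks, so the charge of one tank is spread over twice its volume.
    """
    charge_ah_per_l = theoretical_capacity_ah_per_l(conc_mol_per_l, electrons)
    return charge_ah_per_l * cell_voltage_v / 2.0


if __name__ == "__main__":
    # Concentrations mentioned in the abstract (~2 M solubility, 0.75-1.3 M cycling).
    for conc in (0.75, 1.0, 1.3, 2.0):
        cap = theoretical_capacity_ah_per_l(conc)
        print(f"{conc:.2f} M -> {cap:.1f} Ah/L per electrolyte")
```

For example, assuming one electron per molecule, `theoretical_capacity_ah_per_l(1.3)` gives about 34.8 Ah/L for the 1.3 M electrolyte described in the abstract. Energy density then depends on the cell voltage, which is left as an explicit parameter rather than guessed.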

By modifying the structures of the molecules used in the positive and negative electrolyte solutions, and making them water soluble, the Harvard team was able to engineer a battery that loses only about one percent of its capacity per 1000 cycles at reactant concentrations of 0.75 to 1.00 M.
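Per-cycle retention compounds geometrically, so the "one percent per 1000 cycles" figure can be checked against the per-cycle rates quoted in the abstract. The Python sketch below assumes a constant fractional loss every cycle, which is a simplification: real capacity fade need not be constant over a battery's life.

```python
# Minimal sketch: compound a constant per-cycle capacity retention over many
# cycles. Assumes geometric fade (the same fractional loss every cycle),
# which is a simplification of real battery behaviour.
import math


def retained_fraction(retention_per_step: float, steps: int) -> float:
    """Fraction of the initial capacity left after `steps` cycles."""
    return retention_per_step ** steps


def steps_until(retention_per_step: float, target_fraction: float) -> float:
    """Number of cycles before capacity drops to `target_fraction` of initial."""
    return math.log(target_fraction) / math.log(retention_per_step)


# Per-cycle retention rates quoted in the abstract, written as fractions.
PER_CYCLE_RETENTION = {
    "1.3 M": 0.999943,
    "0.75-1.00 M": 0.999989,
}

if __name__ == "__main__":
    for label, rate in PER_CYCLE_RETENTION.items():
        lost = 1.0 - retained_fraction(rate, 1000)
        print(f"{label}: {100 * lost:.2f}% of capacity lost per 1000 cycles")
```

With 99.9989% retention per cycle, 1 − 0.999989^1000 ≈ 1.09%, consistent with the roughly one percent per 1000 cycles stated above; the 99.9943% rate measured at 1.3 M corresponds to about 5.5% over the same number of cycles.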

“Lithium-ion batteries don’t even survive 1000 complete charge/discharge cycles,” said Michael Aziz, a corresponding author of the paper.

“Because we were able to dissolve the electrolytes in neutral water, this is a long-lasting battery that you could put in your basement,” said Roy Gordon, the paper’s other corresponding author. “If it spilled on the floor, it wouldn’t eat the concrete, and since the medium is noncorrosive, you can use cheaper materials to build the components of the batteries, like the tanks and pumps.”

This cost reduction is important. The Department of Energy (DOE) has set a goal of building a battery that can store energy at a cost of less than $100 per kilowatt-hour, which would make stored wind and solar energy competitive with energy produced by traditional power plants.

“If you can get anywhere near this cost target then you change the world,” said Aziz. “It becomes cost effective to put batteries in so many places. This research puts us one step closer to reaching that target.”

“This work on aqueous soluble organic electrolytes is of high significance in pointing the way towards future batteries with vastly improved cycle life and considerably lower cost,” said Imre Gyuk, Director of Energy Storage Research at the Office of Electricity of the DOE. “I expect that efficient, long duration flow batteries will become standard as part of the infrastructure of the electric grid.”

The key to designing the battery was to first figure out why previous molecules were degrading so quickly in neutral solutions, said Eugene Beh, a postdoctoral fellow and first author of the paper. By first identifying how the molecule viologen in the negative electrolyte was decomposing, Beh was able to modify its molecular structure to make it more resilient.

Next, the team turned to ferrocene, a molecule well known for its electrochemical properties, for the positive electrolyte.

“Ferrocene is great for storing charge but is completely insoluble in water,” said Beh. “It has been used in other batteries with organic solvents, which are flammable and expensive.”

But by functionalizing ferrocene molecules in the same way as with the viologen, the team was able to turn an insoluble molecule into a highly soluble one that could also be cycled stably.

“Aqueous soluble ferrocenes represent a whole new class of molecules for flow batteries,” said Aziz.

The neutral pH should be especially helpful in lowering the cost of the ion-selective membrane that separates the two sides of the battery. Most flow batteries today use membranes made of expensive polymers that can withstand the aggressive chemistry inside the battery, and these membranes can account for up to one-third of the total cost of the device. With essentially salt water on both sides of the membrane, the expensive polymers can be replaced by cheap hydrocarbons.

This research was coauthored by Diana De Porcellinis, Rebecca Gracia, and Kay Xia. It was supported by the Office of Electricity Delivery and Energy Reliability of the DOE and by the DOE’s Advanced Research Projects Agency-Energy.

With assistance from Harvard’s Office of Technology Development (OTD), the researchers are working with several companies to scale up the technology for industrial applications and to optimize the interactions between the membrane and the electrolyte. Harvard OTD has filed a portfolio of pending patents on innovations in flow battery technology.

ORIGINAL: Daily Accord


Feb 9, 2017